The students developed a software program that analyzes workers in an industrial setting to help improve safety, streamline operations and integrate robotic assistance.

Predicting the future might involve a fortune teller or a time machine, and only in the movies at that. But for one group of students at UCF’s Center for Research in Computer Vision (CRCV), foretelling what happens next is just a part of what they have been exploring in their research.

CRCV graduate students Jyoti Kini and Ishan Dave, along with undergraduate student Sarah Fleisher, beat out five other university teams in a contest that tasks competitors with predicting human behavior in an industrial setting. The group placed first in the Multimodal Action Recognition on the Meccano Dataset competition, held at the 22nd International Conference on Image Analysis and Processing (ICIAP).

The event challenged students to find ways to improve safety, streamline operations and integrate robotics in a factory setting.

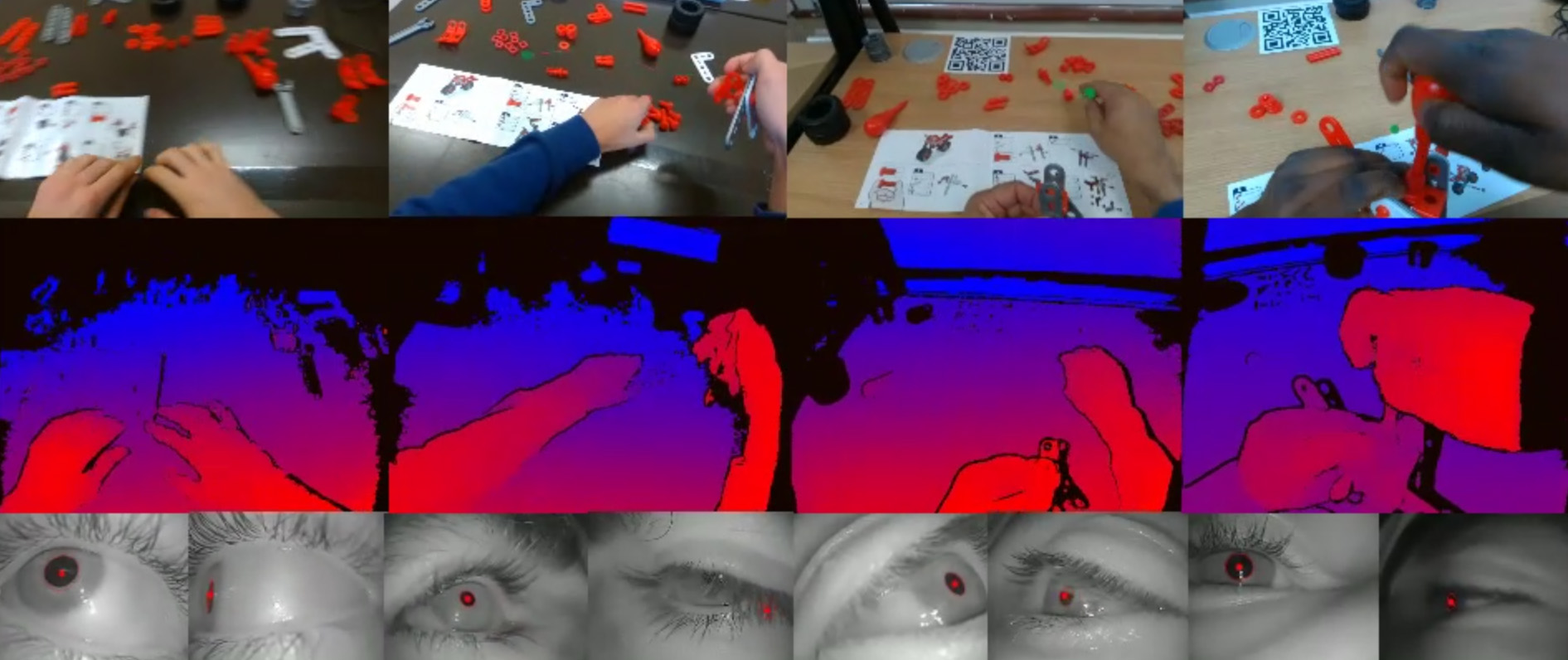

To mimic an industrial environment, each team was given the Meccano dataset, a collection of first-person, or egocentric, videos of 20 people assembling a toy motorbike from a Meccano model construction kit. The videos served as a simplified microcosm of a factory line, recording subjects outfitted with head-mounted cameras as they worked with two tools, 49 motorbike pieces and an instruction manual.

The cameras captured multi-modal data, including gaze signals that recorded where the subject was looking, RGB signals that captured shapes and colors, and depth signals that tracked how near or far an object was from the subject.

“When you combine these signals, a computer can make predictions about actions,” Kini says. “For instance, if it detects a person with RGB, looking at a door tracking gaze, and that door is relatively close from reading depth, it might predict that the person is going to walk through that door.”
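One common way to combine signals like these is late fusion, in which each modality produces its own score for every candidate action and the scores are then averaged. The sketch below is only an illustration of that general idea, not the CRCV team's method: the action labels, the per-modality scores and the equal weights are all hypothetical values chosen to mirror the door example.

```python
from typing import Dict, List

def fuse_scores(per_modality: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Late fusion: weighted average of per-action scores across modalities."""
    total_weight = sum(weights[name] for name in per_modality)
    n_actions = len(next(iter(per_modality.values())))
    fused = [0.0] * n_actions
    for name, scores in per_modality.items():
        for i, score in enumerate(scores):
            fused[i] += weights[name] * score / total_weight
    return fused

# Hypothetical action labels, for illustration only.
ACTIONS = ["walk_through_door", "pick_up_tool", "read_manual"]

# Hypothetical per-modality scores (each list sums to 1).
scores = {
    "rgb":   [0.5, 0.3, 0.2],  # a person is visible near a door
    "gaze":  [0.7, 0.1, 0.2],  # the person is looking at the door
    "depth": [0.6, 0.2, 0.2],  # the door is relatively close
}
weights = {"rgb": 1.0, "gaze": 1.0, "depth": 1.0}  # assumed equal weighting

fused = fuse_scores(scores, weights)
best_index = max(range(len(fused)), key=fused.__getitem__)
predicted = ACTIONS[best_index]
print(predicted, fused)
```

With these made-up numbers, all three signals favor the same action, so the fused prediction is that the person will walk through the door. In practice the per-modality scores would come from trained models rather than fixed lists.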

To complete the challenge, the CRCV students developed software that predicted human behavior by analyzing the vast amounts of information from the Meccano dataset, including tens of thousands of video segments, tagged images and annotated text. They submitted their findings to the contest organizers, then presented their report virtually during ICIAP.

Fleisher says their findings can be applied in real-world industrial environments to minimize product defects or further streamline processes.

“The skills used in the software can apply in industry settings as they can be utilized to improve the overall manufacturing process with the aid of wearable devices,” she says. “Workers can wear these devices to oversee the actions performed through the egocentric viewpoint to provide feedback during the manufacturing process.”

Kini says data like this is invaluable for studying human behavior in an industrial setting, opening the door to myriad research opportunities.

Written by Bel Huston | Feb. 12, 2024